About DeepCert

The robustness of neural network classifiers to small input perturbations has been the subject of intense research in recent years. Inferring the robustness of classifiers when subject to perturbations likely to be observed within the operational context is not, however, generally possible. Research to determine the robustness of classifiers to contextually meaningful perturbations, e.g. their performance when the input is an image affected by fog or changes in lighting, remains underexplored.

The robustness of neural network classifiers to small input perturbations has been the subject of intense research in recent years. Inferring the robustness of classifiers when subject to perturbations likely to be observed within the operational context is not, however, generally possible. Research to determine the robustness of classifiers to contextually meaningful perturbations, e.g. their performance when the input is an image affected by fog or changes in lighting, remains underexplored.

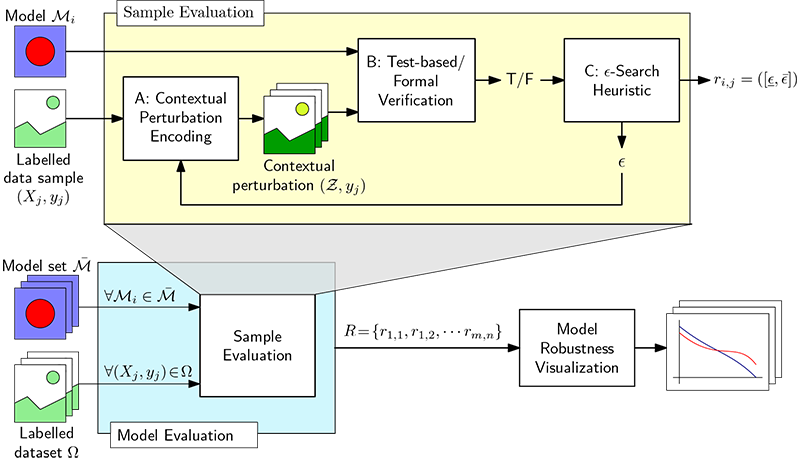

Our tool-supported method for the verification of neural network classifiers subjected to contextually meaningful perturbations allows for:

- The formal encoding of contextually relevant image perturbations at quantified perturbation levels ε ∈ [0,1].

- The verification of contextually relevant DNN robustness, to establish how the accuracy of a DNN degrades as the perturbation level ε increases. DeepCert can perform this verification using either test-based (fast but approximate) or formal verification (slow but providing formal guarantees).

- The generation of contextually relevant counterexamples. These counterexamples provide engineers with visually meaningful information about the level of blur, haze, etc. at which DNN classifiers stop working correctly.

- The selection of DNNs appropriate for the operational context:

- envisaged when a safety-critical system is designed, or

- observed by the deployed system during operation.

We illustrate our method using two well known image classification problems in the presence of three types of contextual perturbation. We demonstrate how models may be verified using test-based verification within the framework and how formal verification can be integrated for a subset of the problem space. Using the framework we show how model performance varies depending on the type of perturbation encountered and how the counter examples produced allow for iterative model improvement.

Downloading the tool

We implemented our method using a Python framework which we have made available on our tool website. The repository includes all models used in the paper, the code for the DeepCert tool with the encoded perturbations presented in the paper, the supporting scripts required to generate the performance visualisations and instructions on how to use the framework. In addition, a version of Marabou is provided with a Python interface in which the haze perturbation from the previous section is encoded. Our tool is available for download from our repositoryContacting us

If you wish to contact the authors then please use the following email addresses:

Colin Paterson: colin.paterson@york.ac.uk